Inference and insinuations

A little meander through the AI inference onslaught

inference getting more efficient (and hooray for Google’s TPUs)

no inference wall, yet

inference took my SaaS? More scenes from the front lines

inference took my IPO?

inference attack on white collar jobs?

👉👉👉 Reminder: you can sign up for the Weekly Recap only, if daily emails are too much. Find me on Twitter for more fun. 👋👋👋 Random Walk has been piloting some other initiatives and would now like to hear from a broader universe of you:

(1) 🛎️ Schedule a time to chat with me. I want to know what would be valuable to you.

(2) 💡 Find out more about Random Walk Idea Dinners. High-Signal Serendipity.

Inference and insinuations

Picking up on “tech-driven productivity gains,” there’s another thing that tech is rapidly making cheaper: “inference.”

“Inference” is the industry term for the “thinking” a trained model does when it is put to work on new inputs. Inference isn’t just the model repeating what it already “knows” from its training data; it’s what the model is able to infer about new data, scenarios, problems, etc.

It’s a bit more like functional intelligence in that regard, and it’s kind of a big deal.
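For the technically curious, here is a toy Python sketch of the training/inference split. The “model” is just a straight line fitted by least squares, an assumption chosen purely for simplicity; real AI models have billions of parameters, but the division of labor is the same: training sets the parameters once, and inference reuses them on every new input.

```python
# Toy "model": a straight line y = w * x + b fitted by ordinary least squares.
# This is an illustrative stand-in, not how large AI models are actually built.

def train(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Training: learn parameters (w, b) from example data, done once up front."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b

def infer(params: tuple[float, float], x: float) -> float:
    """Inference: apply the frozen parameters to an input never seen in training."""
    w, b = params
    return w * x + b

params = train([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])  # data follows y = 2x
print(infer(params, 10.0))  # x = 10 was not in the training data; prints 20.0
```

Training happens once per model; inference runs every time someone uses it, which is why inference is where recurring compute demand piles up.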

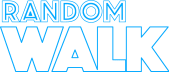

Inference is also the thing that AI is going to need to do to be useful, and it’s where the compute action is expected to live:

Inference “could make up more than 40% of data center demand in 2030, growing 35% CAGR.”
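To get a feel for what “35% CAGR” implies, a minimal Python sketch of compound growth follows. The five-year horizon (2025 to 2030) is an assumption for illustration only; the quote doesn’t state the base year.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` for `years` years."""
    return (1 + cagr) ** years

# Assumed horizon: 2025 -> 2030 (5 years); the source does not give a base year.
multiple = growth_multiple(0.35, 5)
print(f"{multiple:.2f}x")  # → 4.48x
```

In other words, 35% a year sustained for five years means roughly 4.5 times as much inference demand at the end as at the start.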

Right now, training and inference account for roughly equal shares of what data centers are being used for. In the near future, inference may account for ~50% more GPU demand than training.
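Translating “~50% more than training” into a share takes one line of arithmetic, sketched here in Python. The function name is hypothetical and the calculation only covers training plus inference.

```python
def inference_share(inference_to_training: float) -> float:
    """Inference's share of combined training + inference compute,
    given inference demand expressed as a multiple of training demand."""
    return inference_to_training / (1 + inference_to_training)

print(f"today:  {inference_share(1.0):.0%}")  # roughly equal -> 50%
print(f"future: {inference_share(1.5):.0%}")  # ~50% more than training -> 60%
```

Note that this 60% is a share of AI compute (training plus inference), so it may use a different denominator than the “40% of data center demand” figure quoted above.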

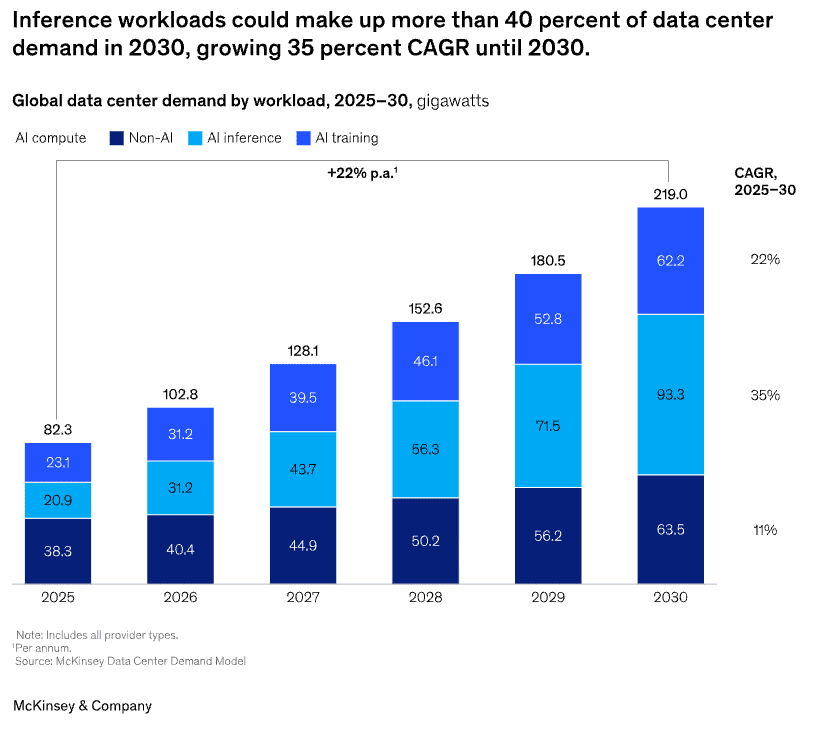

Inference compute is also apparently getting a lot cheaper:

Inference costs on the leading chipmakers’ hardware have been declining steadily for the past ~3 years.

Nvidia’s chips have gotten the cheapest, but Google’s TPUs are cheapafying at the quickest rate. AMD is the laggard.
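“Cheapest” (the level) and “cheapening fastest” (the rate) are different measurements, so both can be true at once. A small Python sketch makes the distinction concrete; the chip names and costs are hypothetical, illustrative numbers, not Goldman’s data and not actual Nvidia, Google, or AMD figures.

```python
def annualized_decline(start_cost: float, end_cost: float, years: float) -> float:
    """Average yearly percentage decline implied by going from start_cost to end_cost."""
    return 1 - (end_cost / start_cost) ** (1 / years)

# Hypothetical cost per unit of inference, (start, end) over ~3 years.
# Illustrative only: NOT the chart's data.
chips = {
    "chip_a": (1.00, 0.40),  # starts cheap and ends cheapest
    "chip_b": (3.00, 0.60),  # starts expensive but falls fastest
}
for name, (start, end) in chips.items():
    rate = annualized_decline(start, end, 3)
    print(f"{name}: ends at {end:.2f}, falls {rate:.0%} per year")
```

Here chip_a ends up cheaper, while chip_b’s cost falls faster each year; if that faster rate persists, chip_b eventually closes the gap.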

Goldman’s point in making this chart was to suggest a growing competitive advantage for Google relative to the field (hooray, Google).

My point is to keep expecting a Jevons-ish relationship between cost and demand: as inference gets cheaper, there will be more and more demand for it. That machines will do more and more of the “thinking” for all of us seems inevitable, and the pace of change is accelerating.
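The Jevons paradox, in its textbook form, says that efficiency gains which cut the cost of using a resource can end up raising total consumption of it. Here is a minimal Python sketch under one common modeling assumption: a constant-elasticity demand curve with a made-up elasticity of 1.5. When demand is elastic (elasticity above 1), usage grows faster than price falls, so total spend rises.

```python
def demand(price: float, elasticity: float, k: float = 1.0) -> float:
    """Constant-elasticity demand curve (an assumed model): Q = k * price ** -elasticity."""
    return k * price ** -elasticity

def total_spend(price: float, elasticity: float) -> float:
    """Price times quantity demanded at that price."""
    return price * demand(price, elasticity)

# Assumed elasticity > 1: demand is "elastic", so falling prices raise total spend.
e = 1.5
for price in (1.0, 0.5, 0.25):
    print(f"price {price:.2f}: usage {demand(price, e):.2f}, spend {total_spend(price, e):.2f}")
```

With these assumed numbers, cutting the price to a quarter multiplies usage by 8 and doubles total spend. Set the elasticity below 1 and spend falls instead, so the Jevons-style outcome depends on how price-sensitive demand for inference really is.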

ICYMI

AI inferring better and more

Contrary to the ‘AI is hitting a wall’ claims, there is apparently no wall, as of yet.